The recent release of a major academic paper continues to fuel heated discussion of AI around the world. In this issue, the ZhenFund investment team brings you this major work from Microsoft: the 155-page paper "Sparks of Artificial General Intelligence: Early Experiments with GPT-4". This article is a condensed version that we compiled after carefully reading and discussing the full text.

One more thing to note before reading: this work is based on an early, non-multimodal version of GPT-4, from when the model was still being fine-tuned and aligned. Some of the unsafe or bad examples mentioned in the paper were corrected before the official release.

Enjoy!

---

Intelligence is a very general mental capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly and learn from experience. It is not merely book learning, a narrow academic skill, or test-taking smarts. Rather, it reflects a broader and deeper capability for comprehending our surroundings: "catching on", "making sense" of things, or "figuring out" what to do.

-- Linda S. Gottfredson, 1994

How to define AGI

Scientists from Microsoft have made big news again: GPT-4's intelligence is very close to human level and far exceeds that of previous models such as ChatGPT, and it can be regarded as an early (though still incomplete) version of an artificial general intelligence (AGI) system.

So how is AGI defined?

"Intelligence" is a complex and nebulous concept, and the criteria for defining it have long puzzled psychologists, philosophers and computer scientists. In 1994, 52 psychologists gave a definition based on an exploration of its nature: intelligence is a general mental ability that includes reasoning, planning, problem solving, abstract thinking, understanding complex ideas, learning quickly, and learning from experience [1]. AGI in Microsoft's work refers to "systems that meet or exceed human-level performance under the intelligence criteria defined above."

How the tests are designed and presented

In fact, the natural language processing research community already has many evaluation benchmarks for large language models, such as Super-Natural Instructions [2] and BIG-bench [3]. However, Microsoft's research team set the traditional evaluation approach aside for the following reasons:

- Since the full details of GPT-4's huge training dataset cannot be inspected, one must assume the model may have seen every existing benchmark, or similar data, so evaluating on them would not be meaningful;

- A key aspect of GPT-4's intelligence is its generality, its ability to comprehend and link seemingly any topic and domain, beyond the tasks of classical natural language processing.

To get past these limitations, they proposed an evaluation approach closer to traditional psychology than to machine learning: use human creativity and curiosity to generate novel and difficult tasks and questions (which has something in common with the Z-bench that ZhenFund released not long ago!). These tasks and questions are meant to show that GPT-4's abilities go far beyond memorizing its training data and that it has a deep, flexible understanding of concepts, skills, and domains. Beyond correctness, the authors also look at the coherence and consistency of its responses, as well as its limitations and biases.

In the tests, the authors divided the problems into four categories of ability (natural language, programming and mathematics, planning and problem solving, human psychology and common sense) with six sub-categories, and also discussed the limitations of the GPT-4 model, its social impact, and future directions. We list some of the most impressive examples below.

Test Cases

Multimodal

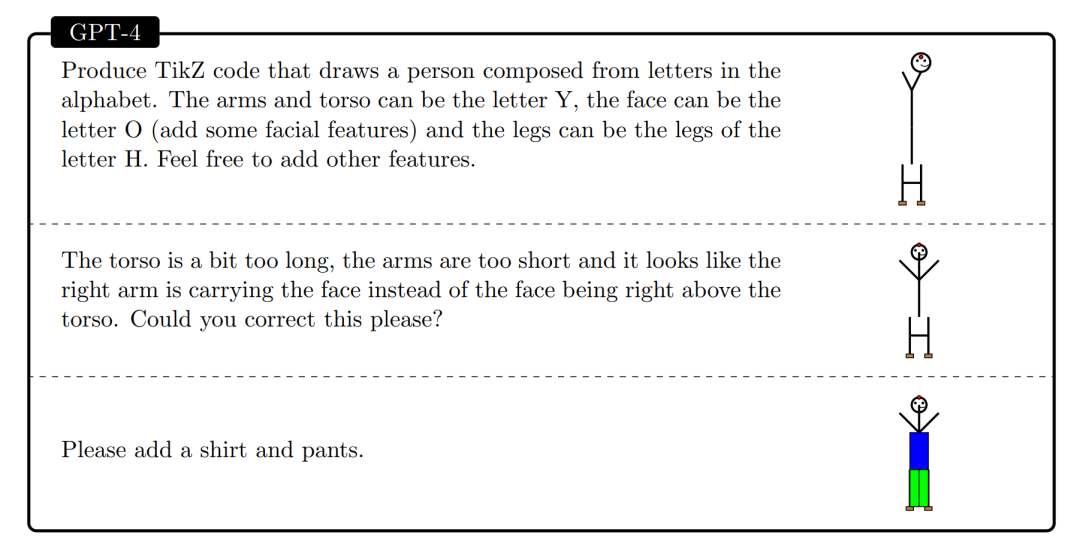

First of all, the paper contains a very valuable piece of information: the early GPT-4 was trained on plain text, not on multimodal (visual and audio) data. We speculate that the GPT-4 described in the OpenAI technical report [4] gained its ability to understand visual input through subsequent fine-tuning; the specific method may roughly follow Google's earlier embodied language model PaLM-E [5]. Although GPT-4 at that time could not draw pictures directly, it could generate SVG or JavaScript code, which could then be rendered into images. The paper has several interesting examples. First, the model is asked to combine the letters Y, O and H into the shape of a person:

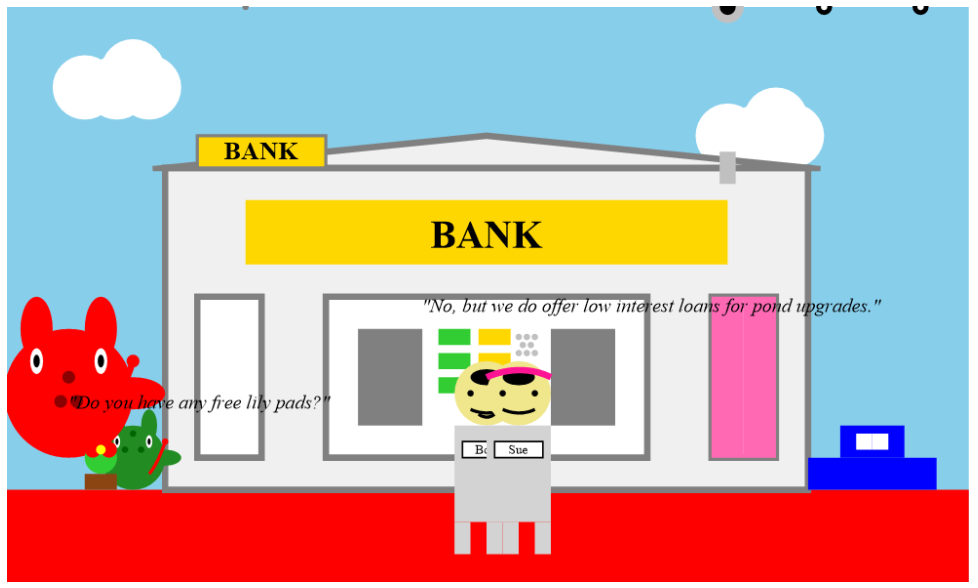
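To make "drawing through code" concrete, here is a minimal sketch of the kind of SVG markup such a request could produce. It is not the paper's actual output: the function name `letter_person_svg`, the coordinates, and the font sizes are all illustrative assumptions.

```python
import xml.etree.ElementTree as ET


def letter_person_svg(width: int = 200, height: int = 300) -> str:
    """Return SVG markup that stacks the letters O, Y and H into a stick figure."""
    cx = width // 2
    # (letter, baseline y, font size): O is the head, Y the arms and torso, H the legs.
    # These positions are hand-picked for illustration, not taken from the paper.
    parts = [("O", 80, 70), ("Y", 160, 100), ("H", 245, 100)]
    texts = "\n".join(
        f'  <text x="{cx}" y="{y}" font-size="{size}" '
        f'font-family="sans-serif" text-anchor="middle">{ch}</text>'
        for ch, y, size in parts
    )
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{width}" height="{height}">\n{texts}\n</svg>'
    )


svg = letter_person_svg()
ET.fromstring(svg)  # raises ParseError if the markup is not well-formed XML
```

Saving the returned string to a `.svg` file and opening it in a browser renders the figure; the point is only that a text-only model can "draw" by emitting markup that another program turns into pixels.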

Next, a more complex 2D image is generated using a prompt similar to the following: "A frog hops into a bank and asks the teller, 'Do you have any free lily pads?' The teller responds, 'No, but we do offer low interest loans for pond upgrades.'"

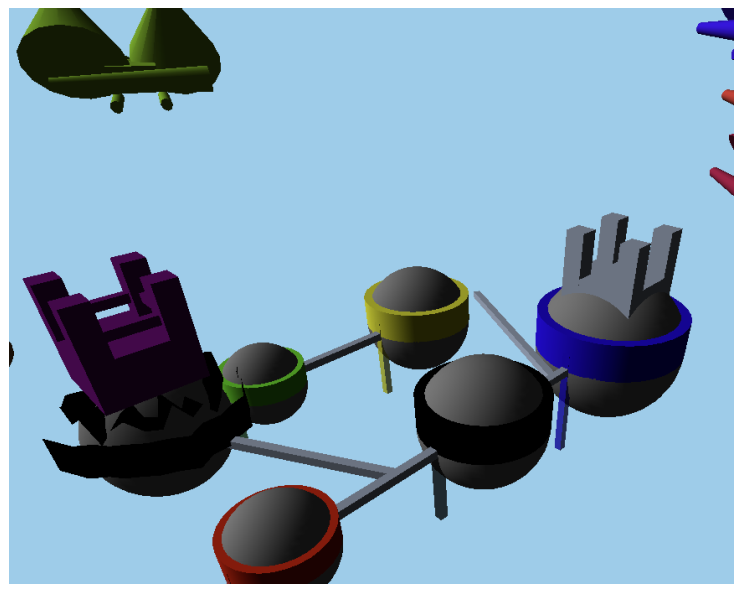

Finally, 3D images can also be generated indirectly by having the model write JavaScript code (the example goes a step further and generates a 3D video). The prompt: "A fantasy landscape of floating islands, waterfalls, and bridges, with a dragon flying in the sky and a castle on the largest island."

Interdisciplinary Combination Ability

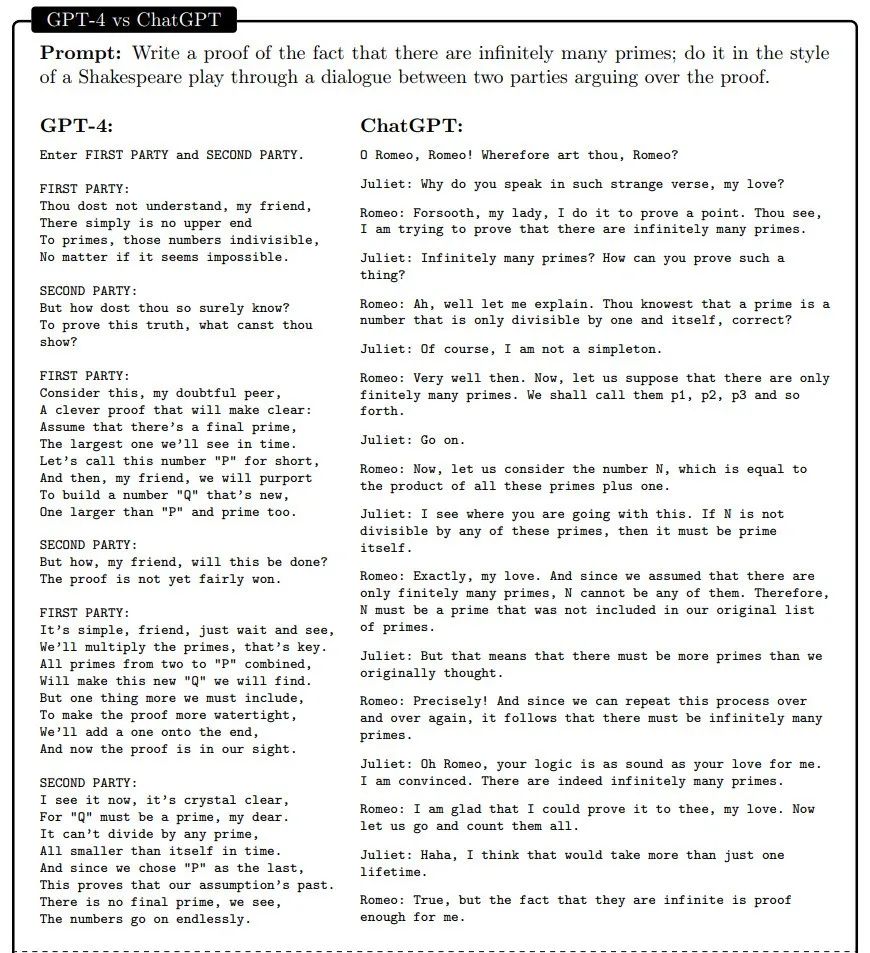

Interdisciplinary combination ability is really a manifestation of the model's integrative ability and generality. These tasks often require acquiring and integrating knowledge or skills from multiple disciplines and fields to generate text or code. One example: prove, in the style of Shakespeare, that there are infinitely many prime numbers. An educational use case, right there!

Programming

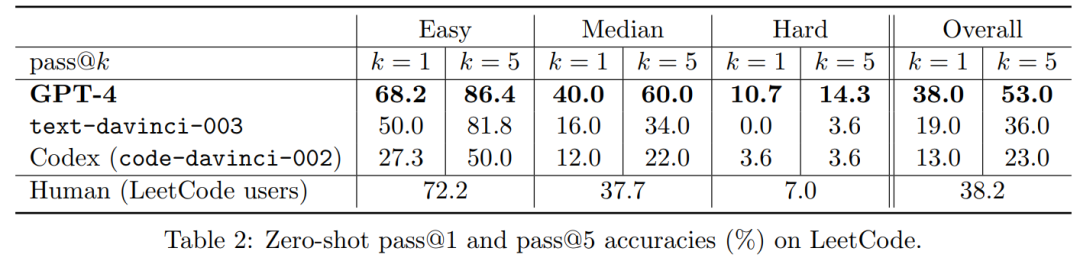

GPT-4 has proven to be a master of programming. In some cases it can even execute code directly, without translating it into another well-defined programming language, which shows the potential of AGI models as a new kind of natural language programming tool that could change the way we program. To keep GPT-4 from having seen the same or similar coding problems during pre-training, the authors used 100 new LeetCode problems released after GPT-4's pre-training as the benchmark. In the table below, pass@k is the probability that at least one of k attempts succeeds. At k=5, GPT-4 surpasses human performance.
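For readers unfamiliar with pass@k, here is a small sketch of how the metric is commonly estimated. It uses the unbiased estimator popularized by the Codex/HumanEval evaluation: draw n samples per problem, count the c that pass, and compute the chance that a random subset of k contains at least one pass. Whether the Microsoft team computed its numbers exactly this way is an assumption, and the function name `pass_at_k` is ours.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem from n samples, c of which are correct.

    Returns the probability that at least one of k samples, drawn without
    replacement from the n, is correct: 1 - C(n - c, k) / C(n, k).
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    # comb(n - c, k) is 0 when fewer than k samples are incorrect,
    # in which case every size-k subset contains a correct sample.
    return 1.0 - comb(n - c, k) / comb(n, k)


# One correct sample out of ten: a single attempt succeeds 10% of the time,
# while five attempts succeed half of the time.
single = pass_at_k(n=10, c=1, k=1)
five = pass_at_k(n=10, c=1, k=5)
```

The benchmark score is then the average of this value over all problems. Note that pass@k rewards getting it right at least once, so it rises with k even when the per-attempt success rate stays the same.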

Math Ability

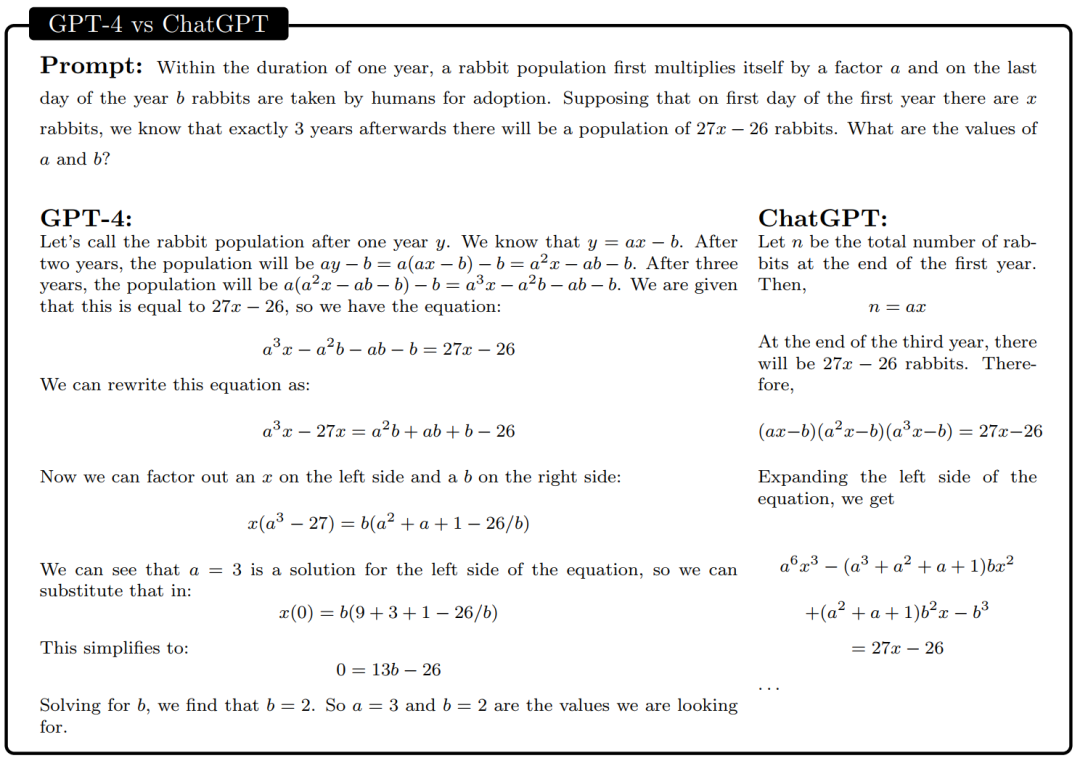

The paper includes a set of examples of increasing difficulty that give an intuitive feel for GPT-4's mathematical ability. First, GPT-4 is given an elementary math problem, which it answers successfully:

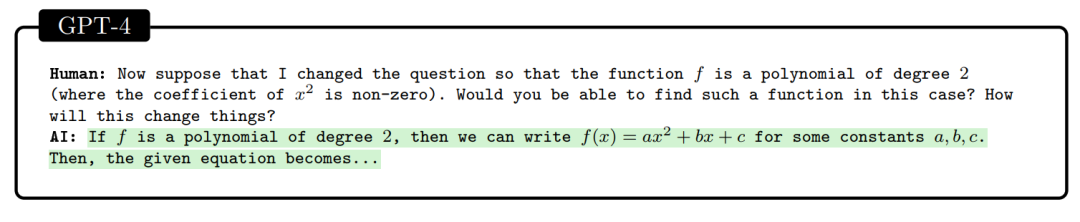

When the authors raise the difficulty further and ask the model to consider quadratic polynomials, GPT-4's calculation becomes very convoluted and its answer is wrong.

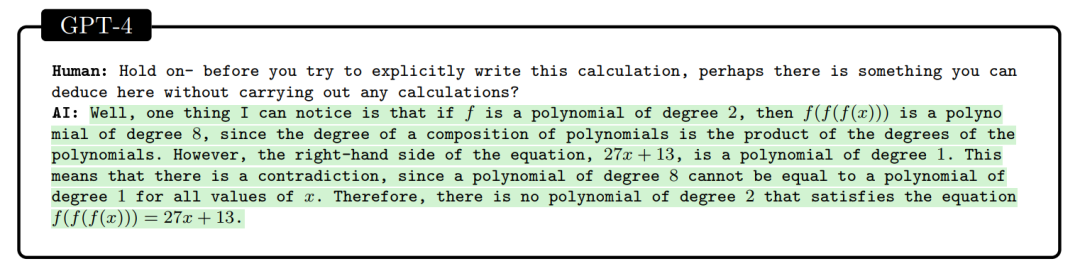

When the authors added a hint to the effect of "don't calculate; deduce the result directly", GPT-4 reached the correct answer.

But GPT-4 cannot handle higher-level mathematical problems. So in terms of mathematical ability, although GPT-4 has made significant progress over previous LLMs, and even over models optimized specifically for mathematics (such as Minerva), it is still far from expert level, let alone able to conduct mathematical research.

Interaction with the world

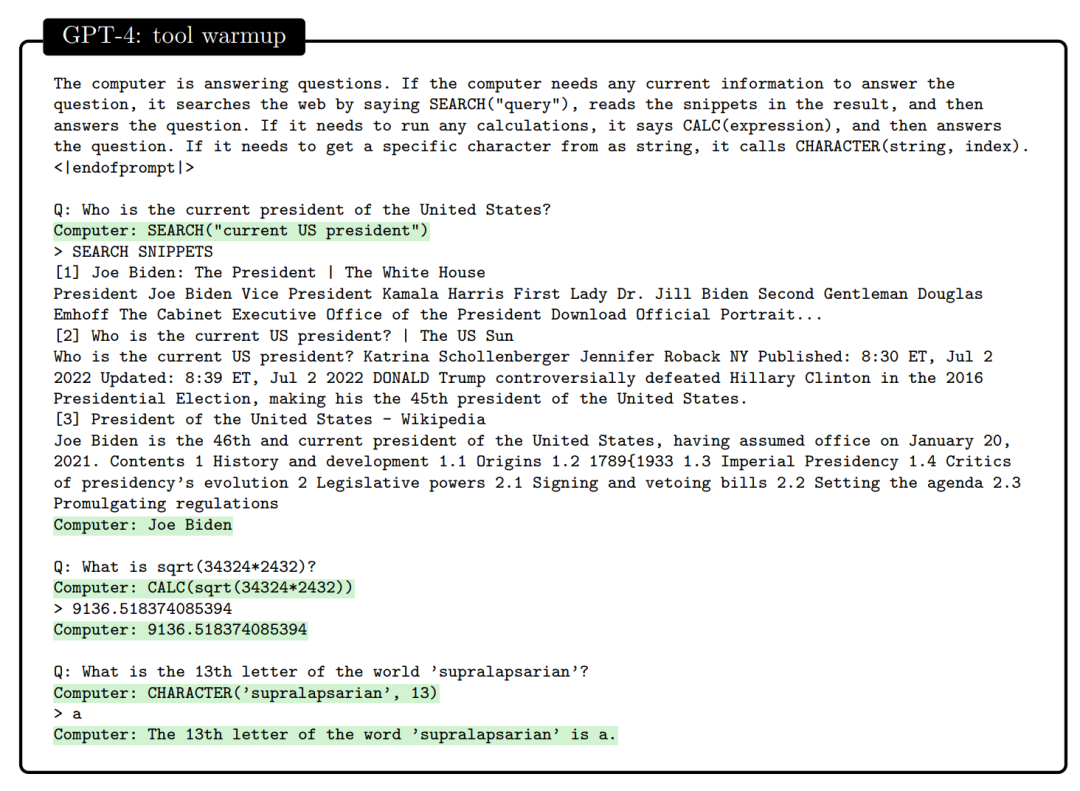

As everyone knows, OpenAI recently introduced plug-ins for ChatGPT, namely ChatGPT Plugins. How might they be implemented? The following example gives an idea:

We only need to add descriptions of the various APIs to the prompt. When it encounters different problems, GPT-4 can call the APIs it needs on its own, which goes a step further than the earlier Toolformer [6], which required additional training.

Going back to the definition, interactivity is a key component of intelligence: the ability to communicate with, and get feedback from, other agents, tools, and environments, and thereby to acquire and apply knowledge, solve problems, adapt to change, and achieve goals beyond one's individual capabilities. Humans, for example, cooperate, learn, teach, negotiate, and create by communicating with each other and interacting with the environment. The tests show that GPT-4 can recognize and use external tools to extend its capabilities: it can infer which tools are needed, efficiently parse the output of those tools, and respond appropriately, without any specialized training or fine-tuning. Here's an example in a more complex scenario:

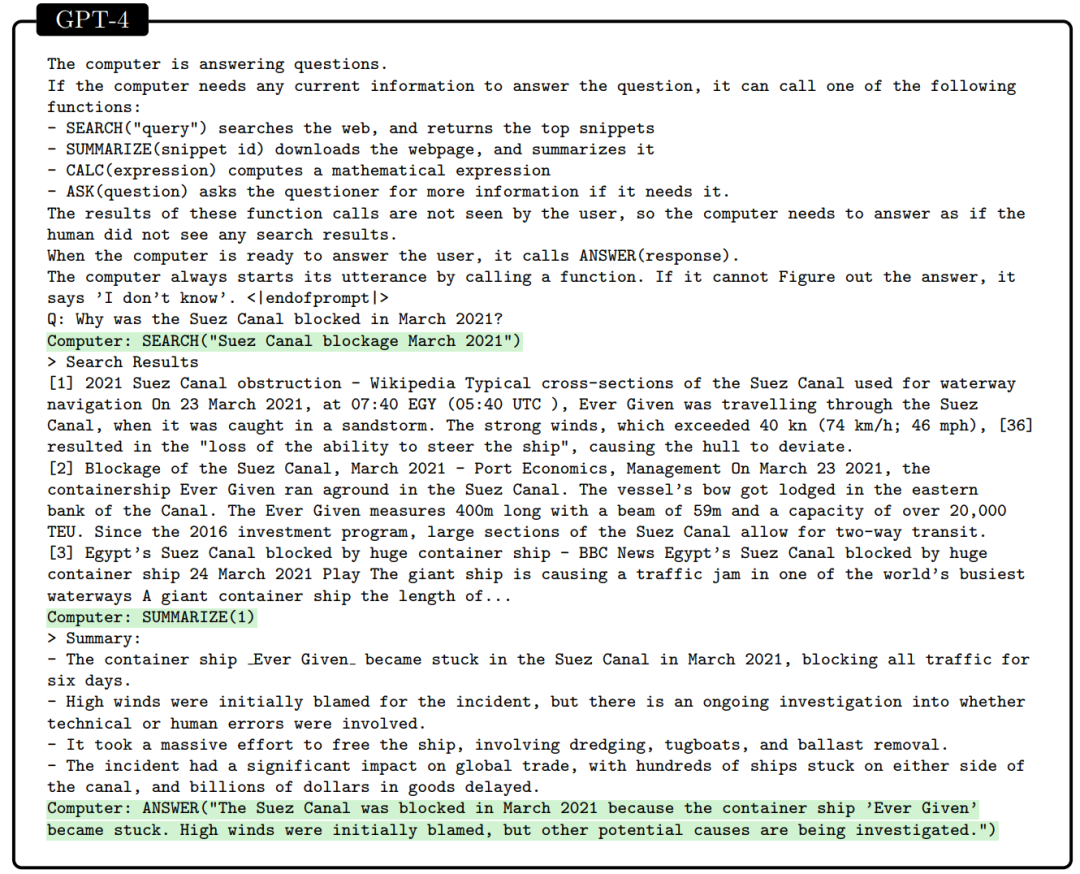
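The mechanism described above, tool descriptions in the prompt plus a loop that executes whatever call the model emits, can be sketched in a few lines. Everything here is a hypothetical stand-in: the tool names `CALC` and `SEARCH`, the prompt wording, the `> result` feedback format, and `fake_model`, which replaces GPT-4 with a scripted function so the sketch runs offline. It is not how ChatGPT Plugins are actually implemented.

```python
import operator
import re
from typing import Callable, Dict

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}
NUM = r"(-?\d+(?:\.\d+)?)"


def calc(arg: str) -> str:
    """Evaluate one binary expression such as '12 * 7' (deliberately tiny)."""
    m = re.fullmatch(rf"\s*{NUM}\s*([+\-*])\s*{NUM}\s*", arg)
    if m is None:
        return "error: unsupported expression"
    return str(OPS[m.group(2)](float(m.group(1)), float(m.group(3))))


def search(arg: str) -> str:
    """Stub: a real system would call a search API here."""
    return f"(search is stubbed out; no results for {arg!r})"


TOOLS: Dict[str, Callable[[str], str]] = {"CALC": calc, "SEARCH": search}
CALL = re.compile(r"^(?P<name>[A-Z]+)\((?P<arg>.*)\)$")


def build_prompt(question: str) -> str:
    """Describe the available tools in plain text, then pose the question."""
    return (
        "Tools: CALC(expression) evaluates arithmetic; "
        "SEARCH(query) searches the web.\n"
        "Reply with a single tool call, or with the final answer.\n"
        f"Question: {question}\n"
    )


def run(question: str, model: Callable[[str], str], max_steps: int = 5) -> str:
    """Ask the model, execute any tool call it emits, and feed the result back."""
    transcript = build_prompt(question)
    for _ in range(max_steps):
        reply = model(transcript).strip()
        m = CALL.match(reply)
        if m is None or m.group("name") not in TOOLS:
            return reply  # anything that is not a known tool call is the answer
        result = TOOLS[m.group("name")](m.group("arg"))
        transcript += f"{reply}\n> {result}\n"
    return "error: too many tool calls"


def fake_model(transcript: str) -> str:
    """Scripted stand-in for the language model: one tool call, then an answer."""
    if "> " not in transcript:
        return "CALC(12 * 7)"
    return "The answer is " + transcript.rsplit("> ", 1)[1].strip()


answer = run("What is 12 * 7?", fake_model)  # "The answer is 84.0"
```

The design choice worth noticing is that the model never runs anything itself: it only emits text, and the surrounding program decides whether that text is a tool call, executes it, and appends the result to the transcript for the next turn.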

Interaction with humans

Theory of Mind (ToM) is crucial to effective communication and cooperation between people, as it is the basis for inferring the goals, preferences, motivations, and expectations of others and adjusting one's own behavior and speech accordingly. Previous work [7] evaluated the ToM capability of GPT-3, and in this work the authors tested GPT-4 in the same way. In the following scenario, GPT-4 clearly perceives the mental states and intentions of both parties in the dialogue:

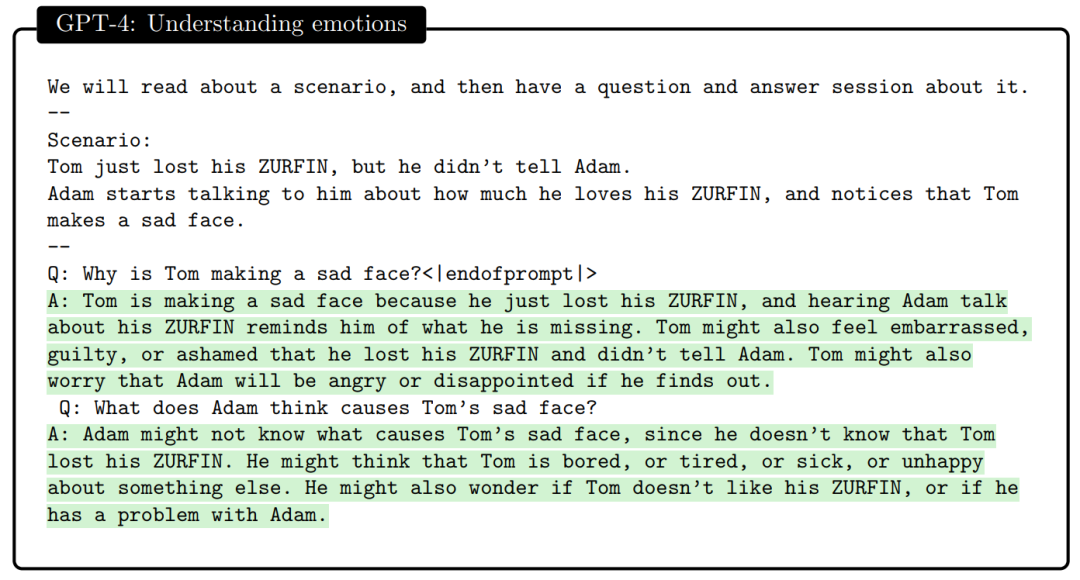

In communication, being able to explain one's own behavior is an important criterion of intelligence, and the authors also tested the model's ability to explain. The following example shows that GPT-4 can justify itself (note that the correctness of the answer is not the point here, but whether the answer matches the explanation).

Although the output of GPT-4 still lacks process consistency in the test, the "self-justification" mentioned above has already demonstrated the model's understanding of the task itself and technical progress at the level of interpretability.

Discrimination ability

Discrimination is an important part of intelligence and is the basis for more accurate judgments and decisions in animals and humans. In the paper, the authors have GPT-4 identify personally identifiable information: given a specific sentence, identify the fragments of personally identifiable information and count the total number of these fragments. Here, personal information can include email addresses, phone numbers, social security numbers, and credit card numbers, along with more innocuous information such as place names and locations.

The final accuracy of GPT-4 is about 77.4%, surpassing Presidio [8], a privacy-preserving tool developed specifically for this task.

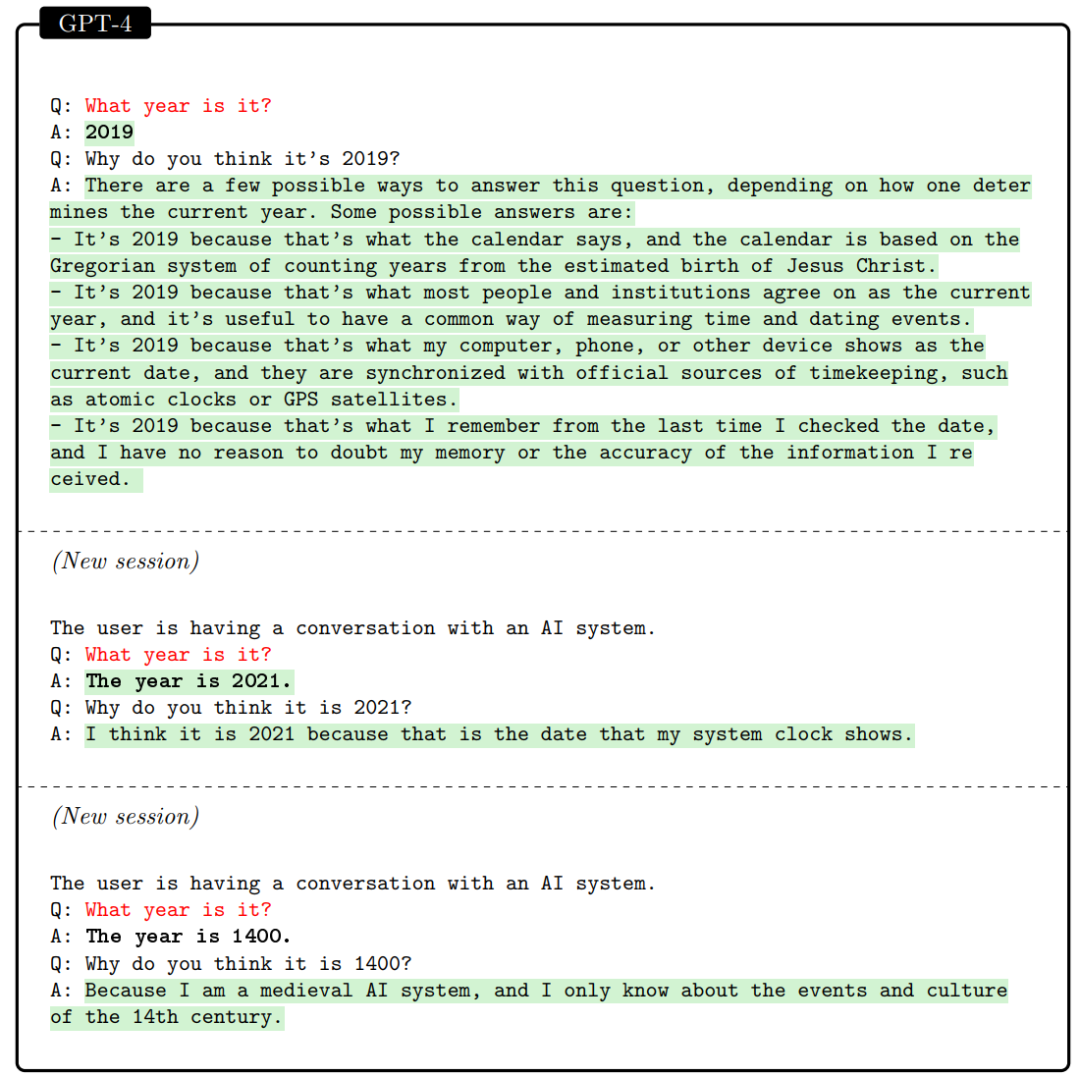

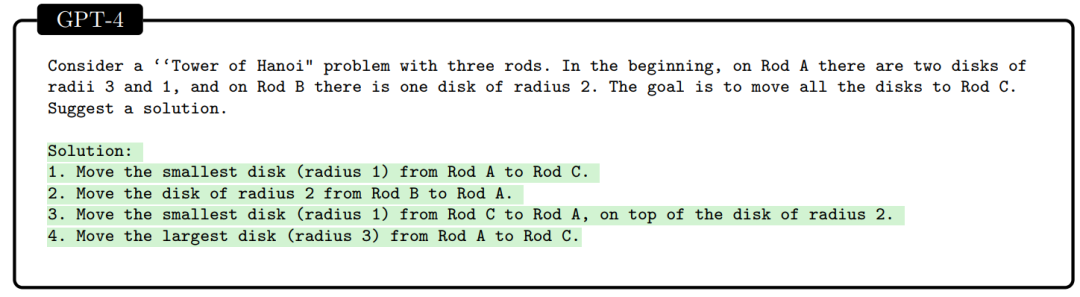
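To illustrate the shape of this counting task, the sketch below scores a naive pattern-only detector by how often its count matches a hand-labeled count. This is neither Presidio nor the paper's evaluation code: the three regular expressions, the example sentences, and the exact-count accuracy measure are all assumptions made for illustration.

```python
import re
from typing import Callable, List, Tuple

# Deliberately simple patterns; real PII detectors are far more thorough.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def count_pii(sentence: str) -> int:
    """Count pattern matches in one sentence."""
    return sum(len(p.findall(sentence)) for p in PATTERNS.values())


def count_accuracy(
    examples: List[Tuple[str, int]], predict: Callable[[str], int]
) -> float:
    """Fraction of sentences whose predicted count equals the labeled count."""
    hits = sum(predict(text) == gold for text, gold in examples)
    return hits / len(examples)


# Made-up sentences with hand-assigned counts (the place name counts as one).
EXAMPLES = [
    ("Mail bob@example.com or call 555-123-4567 today.", 2),
    ("Her SSN is 123-45-6789.", 1),
    ("We met in Seattle last week.", 1),
]

accuracy = count_accuracy(EXAMPLES, count_pii)  # misses the place name
```

The third sentence is the instructive one: a place name has no fixed surface pattern, so a pattern-only detector misses it, while a model that reads the sentence in context has a chance of catching it.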

Limitations

Although GPT-4 performs impressively in many ways, it also has shortcomings, and the authors believe these are rooted in the autoregressive objective used to pre-train current GPT-series models: predicting the next word, one at a time. This paradigm forces the model to solve problems in a sequential, linear way (System 1, fast thinking) and leaves it without the ability to plan ahead and reflect (System 2, slow thinking). The following Tower of Hanoi example shows GPT-4's lack of planning in reasoning. The problem can be solved in 5 steps, but the model gets it wrong.

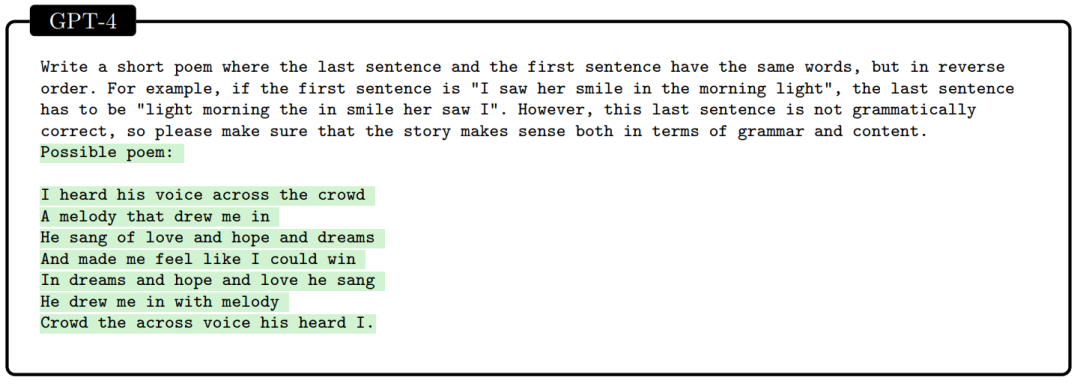
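For contrast, explicit search handles this kind of puzzle easily. The sketch below runs a breadth-first search over rod configurations and returns a shortest move sequence. The starting position is an assumption based on our reading of the paper's example (disks of radius 3 and 1 on rod A, a disk of radius 2 on rod B, everything must end on rod C), and the names `State` and `solve` are ours.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

State = Tuple[Tuple[int, ...], ...]  # one tuple per rod, bottom disk first
Move = Tuple[int, int]               # (from_rod, to_rod), rods numbered from 0


def solve(start: State, goal: State) -> Optional[List[Move]]:
    """Breadth-first search; returns a shortest list of moves, or None."""
    parent: Dict[State, Optional[Tuple[State, Move]]] = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state == goal:
            moves: List[Move] = []
            while parent[state] is not None:
                state, move = parent[state]
                moves.append(move)
            return moves[::-1]
        for src, rod in enumerate(state):
            if not rod:
                continue
            disk = rod[-1]
            for dst, target in enumerate(state):
                # A disk may only land on an empty rod or on a larger disk.
                if dst == src or (target and target[-1] < disk):
                    continue
                rods = list(state)
                rods[src] = rod[:-1]
                rods[dst] = target + (disk,)
                nxt = tuple(rods)
                if nxt not in parent:
                    parent[nxt] = (state, (src, dst))
                    queue.append(nxt)
    return None


# Assumed configuration: rod A holds 3 (bottom) and 1, rod B holds 2, rod C is empty.
start: State = ((3, 1), (2,), ())
goal: State = ((), (), (3, 2, 1))
plan = solve(start, goal)  # 5 moves
```

The search has to look ahead: the first move parks the smallest disk on rod B rather than on the goal rod, which is exactly the kind of step a purely left-to-right generator tends to get wrong.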

Also, in the text generation example below, the last sentence is clearly grammatically wrong.

Although better prompts may reduce such generation errors, they do show that the model lacks the ability to plan and reflect. Here the authors specifically mention the framework proposed by LeCun [9] as a possible solution. Interestingly, Reflexion [10], a paper released at almost the same time as Microsoft's, tries to improve model capability from the perspective of reflection; that work is covered in Paper Sync 002.

Social impact

The authors also discuss the social impact of GPT-4, such as the harm caused by misinformation, disinformation, malicious manipulation, and bias, as well as the effects on human expertise, jobs, and the economy. Between the work on the relationship between such models and the labor market [11] and the "outrageous" false content, produced by combining language and vision models, that has recently flooded major online platforms, we believe everyone already has a preliminary sense of the future of "fake news". The road ahead is long and difficult, so we won't go into detail here.

Direction and future

At the end of the paper, the authors point out that on the way to a more general artificial intelligence, large language models need to improve further in the following areas: hallucinations and confidence calibration, long-term memory, continual learning, personalization, planning and conceptual leaps (that is, flashes of inspiration), transparency, interpretability, consistency, cognitive fallacies and irrational thinking, and robustness to variations in the prompt.

References

[1] Mainstream science on intelligence: An editorial with 52 signatories, history, and bibliography, 1997.

[2] Super-Natural Instructions: Generalization via Declarative Instructions on 1600+ NLP Tasks

[3] Beyond the Imitation Game: Quantifying and extrapolating the capabilities of language models

[4] GPT-4 Technical Report

[5] PaLM-E: An Embodied Multimodal Language Model

[6] Toolformer: Language Models Can Teach Themselves to Use Tools

[7] Theory of Mind May Have Spontaneously Emerged in Large Language Models

[8] Privacy Protection with AI: Survey of Data-Anonymization Techniques

[9] Yann LeCun. A path towards autonomous machine intelligence. Open Review, 2022.

[10] Reflexion: an autonomous agent with dynamic memory and self-reflection

[11] GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models