In the two months since the release of Z-Bench v1.0, more companies, research institutions, and individual researchers in China have taken up the research and development of large-scale models. Whether their work is closed-source or open-source, all of them are contributing to the progress of Chinese large-scale models.

The newcomers include not only leading AI companies such as Alibaba (Tongyi Qianwen), SenseTime (SenseChat), and iFlytek (Xinghuo), but also startups such as Minimax (Yingshi AI) and academic institutions such as Fudan University (MOSS). Meanwhile, the two participants we evaluated last time, Zhipu AI (ChatGLM) and Baidu (Wenxin Yiyan), have continued to improve and update their models.

Therefore, this week, based on Z-Bench v1.0, we have added a few new tasks and evaluated the new models. We have also updated the evaluation results from two months ago. The detailed table is as follows:

In this article, we share the basic setup, the results, and our takeaways from the evaluation, in the hope that they help readers better understand Chinese models.

How to conduct evaluations

First, we selected 10 models for this evaluation: GPT-3.5; GPT-4; Zhipu AI ChatGLM, in both its initial version and the latest 130B-v0.8 version; Baidu Wenxin Yiyan, in both its initial version and the version updated on April 27th; SenseTime SenseChat; Alibaba Tongyi Qianwen; iFlytek Xinghuo; and Fudan University MOSS (open-source).

Second, as in the previous evaluation, we assessed the models along three dimensions: foundational capabilities, emergent capabilities, and vertical capabilities. The evaluation contained 311 questions in total. We refined some of the existing questions and added a few that reflect capabilities or characteristics the models have noticeably gained over the past two months. The new questions are few in number and are provided for reference only.

Lastly, it is important to note that except for some gaming-related questions (such as "Twenty Questions" or "Is it black or white?"), all questions were designed as single-turn dialogues. Considering the inherent randomness in language model responses, we used the first answer provided as the criterion for judging correctness or reasonableness.
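The first-answer rule described above can be sketched as a small tally. This is only an illustration, not the scoring script actually used for Z-Bench: the record layout (a dimension name plus a list of correct/incorrect judgments per attempt) and the function name `accuracy_by_dimension` are assumptions made for the example, and the sample records are made up.

```python
from collections import defaultdict


def accuracy_by_dimension(records):
    """Return accuracy per capability dimension, judging only the first answer.

    `records` is a hypothetical list of (dimension, attempts) pairs, where
    `attempts` is a list of booleans, one per answer the model produced.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for dimension, attempts in records:
        totals[dimension] += 1
        # Only the first answer counts, even if a later retry was correct.
        correct[dimension] += int(attempts[0])
    return {dim: correct[dim] / totals[dim] for dim in totals}


# Made-up sample data, purely to show the rule in action.
sample = [
    ("foundational", [True]),
    ("foundational", [False, True]),  # correct on retry, still scored wrong
    ("emergent", [True, False]),
    ("vertical", [False]),
]
scores = accuracy_by_dimension(sample)
```

Judging only the first answer keeps the scoring reproducible in spirit, at the cost of some noise from the randomness mentioned above.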

Evaluation Results

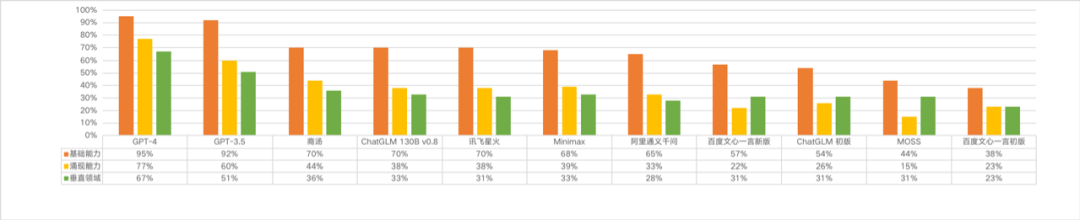

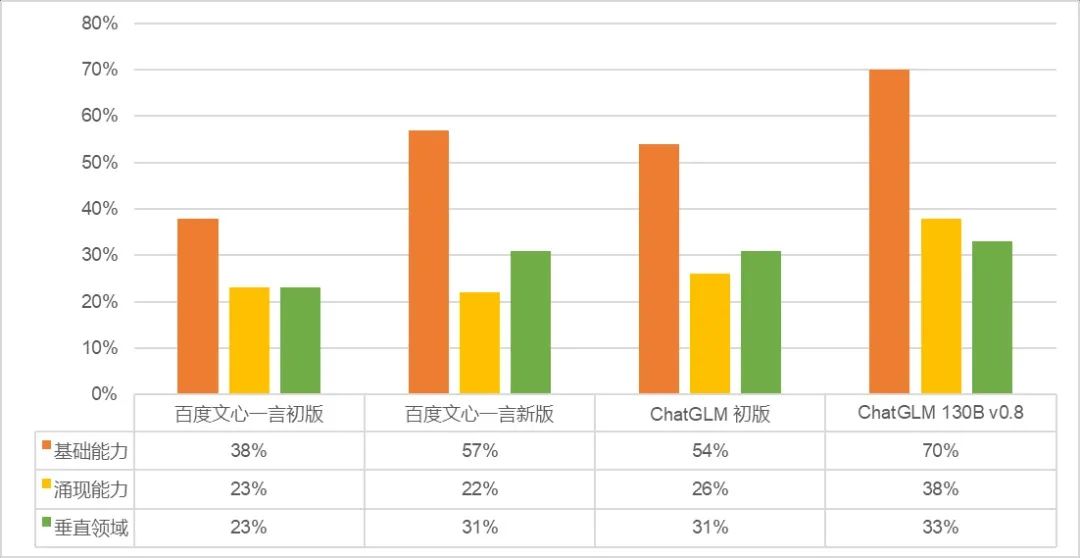

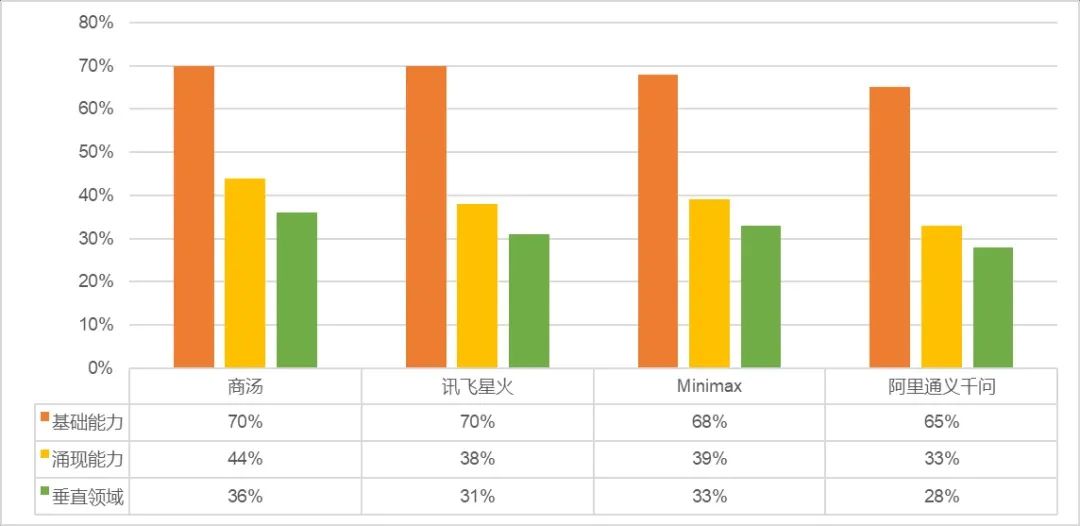

We start with a bar chart that gives a visual comparison of each model's capabilities:

- The horizontal axis lists the models, ordered by their foundational capability scores.

- The vertical axis shows accuracy (the share of questions answered correctly), with orange indicating foundational capability, yellow indicating emergent capability, and green indicating vertical domain capability.

During the evaluation, we clearly observed across-the-board advances in the foundational, emergent, and vertical capabilities of domestic large-scale models. SenseTime SenseChat, ChatGLM 130B-v0.8, and iFlytek Xinghuo each achieved an accuracy of 70% or higher in the foundational capability category. These scores still trail GPT-4's 95% and GPT-3.5's 92%, but compared with the results from two months ago, they give us stronger confidence in the future of domestic models.

So, in which aspects have domestic large-scale models made progress, and what are the areas that require improvement? We can analyze this from two perspectives:

First, consider the changes in individual models. As mentioned earlier, the two "veteran players" in this evaluation, Baidu Wenxin Yiyan and Zhipu AI's ChatGLM, have both released updated versions. Looking at their final scores:

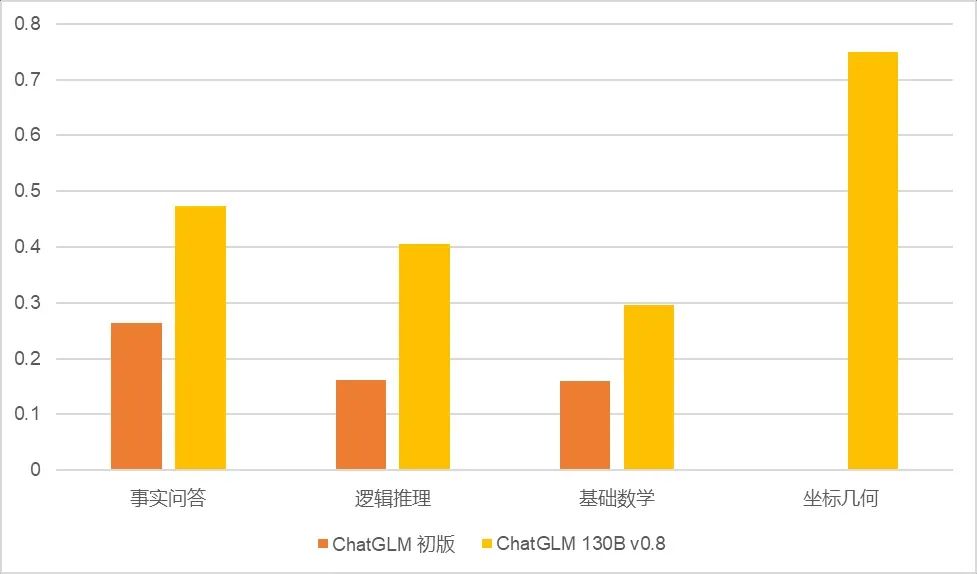

- ChatGLM has improved significantly in both foundational and emergent capabilities. The most notable gains are in factual questions (from 5/19 to 9/19), logical reasoning (from 6/37 to 15/37), mathematics (from 7/44 to 13/44), and coordinate geometry (from 0/4 to 3/4). Its dialogue abilities have advanced markedly, especially in open-ended conversation and role-playing. Most text processing abilities have also improved substantially, particularly language logic judgment, semantic understanding, intent recognition, and concept explanation. Progress is less pronounced in key point extraction and keyword extraction (both also part of text processing) and in programming questions.

- Wenxin Yiyan's progress is concentrated in the foundational capability category. The most noticeable improvement is in factual questions (from 5/19 to 9/19). Coding abilities and domain-specific knowledge questions improved somewhat, but progress in other areas is not significant.
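To make the size of these gains easier to compare across categories with different question counts, the fractions can be converted into percentage-point changes. The sketch below uses three of the ChatGLM figures quoted above and assumes that, in each pair, the higher score belongs to the updated version and the lower one to the initial version; the helper name `point_change` is made up for this example.

```python
from fractions import Fraction

# (updated, initial) scores for ChatGLM, taken from the figures quoted above.
# Assumption: the higher score in each pair is the updated version.
chatglm = {
    "factual questions": (Fraction(9, 19), Fraction(5, 19)),
    "logical reasoning": (Fraction(15, 37), Fraction(6, 37)),
    "coordinate geometry": (Fraction(3, 4), Fraction(0, 4)),
}


def point_change(updated, initial):
    """Return the change in accuracy, in percentage points, rounded to 0.1."""
    return round(float(updated - initial) * 100, 1)


changes = {name: point_change(new, old) for name, (new, old) in chatglm.items()}

for name, delta in changes.items():
    print(f"{name}: +{delta} percentage points")
```

Note that categories with very few questions, such as coordinate geometry with only four, produce large swings from a handful of answers, so these deltas are best read alongside the raw counts.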

Second, consider the capabilities of the newly released models. We take the four most prominent ones, SenseTime SenseChat, iFlytek Xinghuo, Minimax Yingshi AI, and Alibaba Tongyi Qianwen, as examples:

- All four answer factual questions well. Out of 19 factual questions, Alibaba Tongyi Qianwen and iFlytek Xinghuo each answered 12 correctly, SenseTime SenseChat answered 11, and Minimax answered 10.

- In other capabilities, however, each model has its own strengths and weaknesses:

✔ SenseTime SenseChat has fewer categories in which every answer was wrong than the other models, indicating more well-rounded capabilities. It is worth mentioning that SenseChat achieved full marks in several subcategories related to conversation and text processing.

✔ Alibaba Tongyi Qianwen performs better than the other models in common sense and basic programming abilities, but it lacks proficiency in handling data, coding, and symbol-related tasks, and its language logic judgment needs improvement.

✔ iFlytek Xinghuo demonstrates excellent foundational mathematical abilities (17/44), but it is the weakest of the four in geometry, answering every spatial and coordinate geometry question incorrectly.

✔ Minimax's ability in dialogue and text processing is already quite impressive. It received a perfect score in classification, grammar correction, and emotion detection. Its performance in semantic recognition and language logic judgment is also commendable. However, its text summarization capability is not comprehensive, and there is room for improvement in that aspect.

Finally, let's talk about the shortcomings. Domestic Chinese language models still have relatively low programming usability, lack proficiency in data and symbol processing, and have limited capabilities in multilingual processing. The perennial topics of logical reasoning and mathematical abilities also have significant room for improvement.

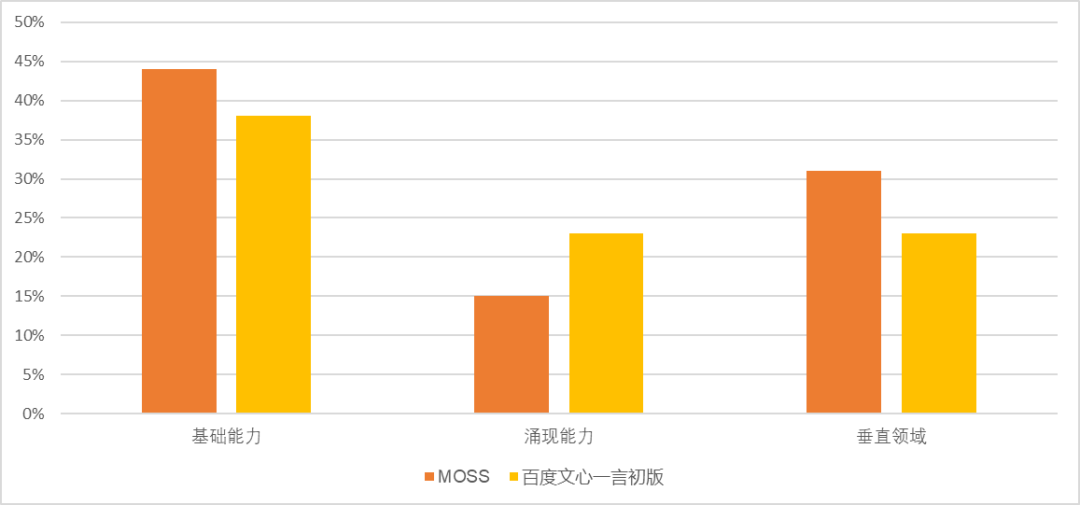

One model in this evaluation deserves special mention: MOSS, developed by Fudan University and fully open-sourced. Although MOSS did not stand out in this evaluation, its overall capabilities have surpassed Baidu Wenxin Yiyan, which was released in March (foundational capability: 44% vs. 38%, emergent capability: 15% vs. 23%, vertical capability: 31% vs. 23%).

It is also worth mentioning that ChatGLM not only performed well in our evaluation but also did very well in the Chatbot Arena evaluation organized by the LMSYS team led by UC Berkeley, where the English version of the open-source ChatGLM-6B showed remarkable performance (the link to those results is at the end of the article). Given the current momentum of open-source models overseas, we hope to hear more good news from the Chinese open-source community!

Lastly, we sincerely look forward to seeing more surprises in the field of large-scale models from Chinese and broader Chinese-speaking enterprises, research institutions, and scholars.

LMSYS Chatbot Arena - https://lmsys.org/blog/2023-05-03-arena/